Inference in Sinusoid Models¶

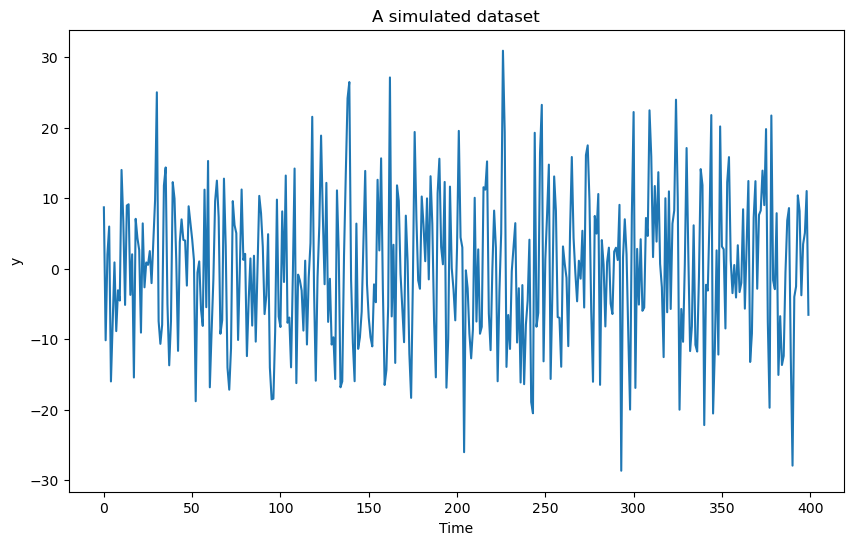

In the last lab, we studied the following problem. Consider the following dataset simulated using the model:

for with some fixed values of and σ. The goal of this problem is to estimate along with associated uncertainty quantification.

Today we will start by addressing the problem of estimating the other parameters also along with uncertainty quantification.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import statsmodels.api as smf = 0.2035

#f = 1.8

n = 400

b0 = 0

b1 = 3

b2 = 5

sig = 10

rng = np.random.default_rng(seed = 42)

errorsamples = rng.normal(loc = 0, scale = sig, size = n)

t = np.arange(1, n + 1)

y = b0 * np.ones(n) + b1 * np.cos(2 * np.pi * f * t) + b2 * np.sin(2 * np.pi * f * t) + errorsamplesplt.figure(figsize = (10, 6))

plt.plot(y)

plt.xlabel('Time')

plt.ylabel('y')

plt.title('A simulated dataset')

plt.show()

To obtain the MLEs of the parameters, we first obtain the MLE of (as before), then plug in , and run linear regression to estimate the other parameters.

def rss(f):

x = np.arange(1, n + 1)

xcos = np.cos(2 * np.pi * f * x)

xsin = np.sin(2 * np.pi * f * x)

X = np.column_stack([np.ones(n), xcos, xsin])

md = sm.OLS(y, X).fit()

rss = np.sum(md.resid ** 2)

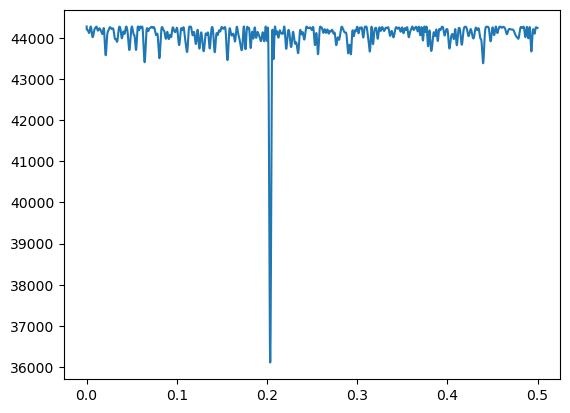

return rss# We can minimize rss(f) over a fine grid of possible f values.

# Since n is not very big here, we can work with a dense grid.

ngrid = 100000

allfvals = np.linspace(0, 0.5, ngrid)

rssvals = np.array([rss(f) for f in allfvals])

fhat = allfvals[np.argmin(rssvals)]

print(fhat)

plt.plot(allfvals, rssvals)

plt.show()0.2035520355203552

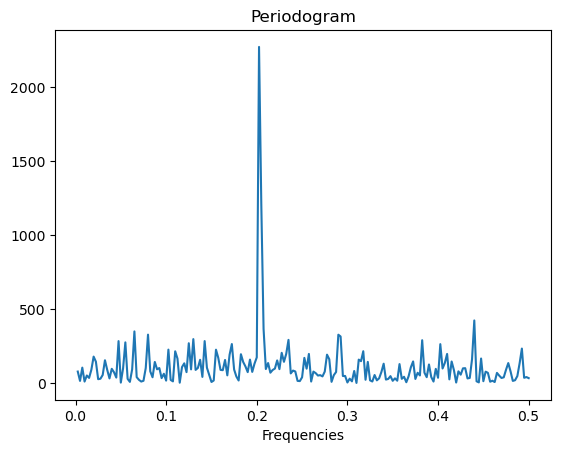

# We can obtain an approximation to the MLE by working with Fourier frequencies and maximizing the periodogram.

fft_y = np.fft.fft(y)

m = n // 2 - 1

fourier_freqs = np.arange(1/n, (1/2) + (1/n), 1/n)

m = len(fourier_freqs)

pgram_y = (np.abs(fft_y[1:(m + 1)]) ** 2)/n

plt.plot(fourier_freqs, pgram_y)

plt.xlabel("Frequencies")

plt.title("Periodogram")

plt.show()

fhat_fft = fourier_freqs[np.argmax(pgram_y)]

print(fhat_fft)

0.2025

Except in rare cases where the true frequency is already a Fourier frequency, the MLE of computed on a fine grid will be closer to the truth compared to the MLE computed on the grid of Fourier frequencies. But the MLE computed on Fourier frequencies is much more computationally cheap.

After computing the MLE of , we can do linear regression (where is fixed the MLE) to compute the MLEs of the coefficient parameters and σ.

x = np.arange(1, n + 1)

xcos = np.cos(2 * np.pi * fhat * x)

xsin = np.sin(2 * np.pi * fhat * x)

Xfhat = np.column_stack([np.ones(n), xcos, xsin])

md = sm.OLS(y, Xfhat).fit()

print(md.params)

# this gives estimates of beta_0, beta_1, beta_2 (compare them to the true values which generated the data)

b0_est = md.params[0]

b1_est = md.params[1]

b2_est = md.params[2]

print(np.column_stack((np.array([b0_est, b1_est, b2_est]), np.array([b0, b1, b2]))))

rss_fhat = np.sum(md.resid ** 2)

sigma_mle = np.sqrt(rss_fhat / n)

sigma_unbiased = np.sqrt((rss_fhat)/(n - 3))

print(np.array([sigma_mle, sigma_unbiased, sig]))

# sig is the true value of sigma which generated the data[-0.05332889 3.00407151 5.63909328]

[[-0.05332889 0. ]

[ 3.00407151 3. ]

[ 5.63909328 5. ]]

[ 9.50176964 9.53760297 10. ]

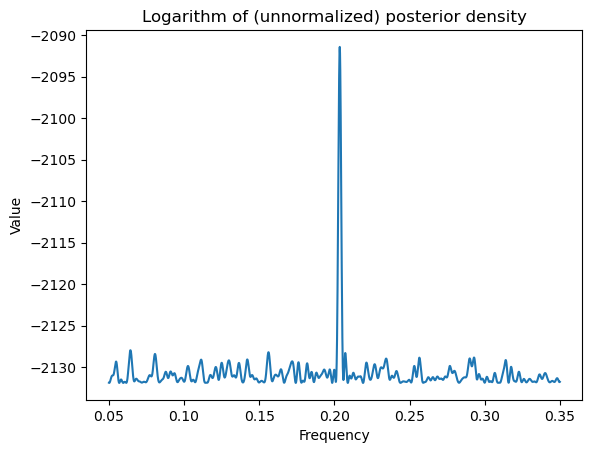

Now let us use Bayesian inference for uncertainty quantification. The Bayesian posterior for is:

where and denotes the determinant of .

It is better to compute the logarithm of the posterior (as opposed to the posterior directly) because of numerical issues.

def logpost(f):

x = np.arange(1, n+1)

xcos = np.cos(2 * np.pi * f * x)

xsin = np.sin(2 * np.pi * f * x)

X = np.column_stack([np.ones(n), xcos, xsin])

p = X.shape[1]

md = sm.OLS(y, X).fit()

rss = np.sum(md.resid ** 2)

sgn, log_det = np.linalg.slogdet(np.dot(X.T, X))

# sgn gives the sign of the determinant (in our case, this should 1)

# log_det gives the logarithm of the absolute value of the determinant

logval = ((p - n)/2) * np.log(rss) - 0.5 * log_det

return logvalWhile evaluating the log posterior on a grid, it is important to make sure that we do not include frequencies for which is singular. This will be the case for and . When is very close to 0 or 0.5, the term will be very large because of near-singularity of . We will therefore exclude frequencies very close to 0 and 0.5 from the grid while calculating posterior probabilities.

From the Periodogram plotted above, it is clear that the maximizing frequency is around 0.2. So we will take a grid that around 0.2 (such as 0.05 to 0.35)

ngrid = 10000

allfvals = np.linspace(0.05, 0.35, ngrid)

print(np.min(allfvals), np.max(allfvals))

logpostvals = np.array([logpost(f) for f in allfvals])

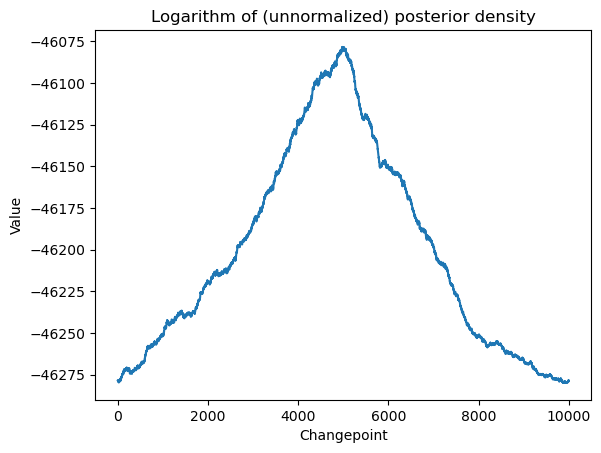

plt.plot(allfvals, logpostvals)

plt.xlabel('Frequency')

plt.ylabel('Value')

plt.title('Logarithm of (unnormalized) posterior density')

plt.show()0.05 0.35

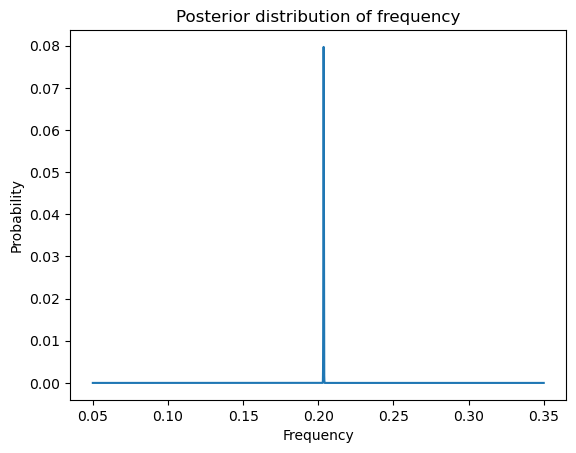

Note that the logarithm of the posterior looks similar to the periodogram. But the posterior itself will have only one peak (which dominates all other peaks).

postvals_unnormalized = np.exp(logpostvals - np.max(logpostvals))

postvals = postvals_unnormalized / (np.sum(postvals_unnormalized))

plt.plot(allfvals, postvals)

plt.xlabel('Frequency')

plt.ylabel('Probability')

plt.title('Posterior distribution of frequency')

plt.show()

We can draw posterior samples from f as follows.

N = 2000

fpostsamples = rng.choice(allfvals, N, replace = True, p = postvals)Given a posterior sample of , a posterior sample of σ can be drawn (this result was proved in Problem 4 of Homework one):

Further, given posterior samples from and σ, a posterior sample from is drawn using:

This is done in code as follows.

post_samples = np.zeros(shape = (N, 5))

post_samples[:,0] = fpostsamples

for i in range(N):

f = fpostsamples[i]

x = np.arange(1, n + 1)

xcos = np.cos(2 * np.pi * f * x)

xsin = np.sin(2 * np.pi * f * x)

X = np.column_stack([np.ones(n), xcos, xsin])

p = X.shape[1]

md_f = sm.OLS(y, X).fit()

chirv = rng.chisquare(df = n - p)

sig_sample = np.sqrt(np.sum(md_f.resid ** 2) / chirv) # posterior sample from sigma

post_samples[i, (p + 1)] = sig_sample

covmat = (sig_sample ** 2) * np.linalg.inv(np.dot(X.T, X))

beta_sample = rng.multivariate_normal(mean = md_f.params, cov = covmat, size = 1)

post_samples[i, 1:(p + 1)] = beta_sample

print(post_samples)[[ 0.20340534 -0.56189444 3.28165364 5.35964685 9.18963362]

[ 0.20367537 -1.22669044 3.16545782 6.3549404 9.63517389]

[ 0.20343534 -0.18052831 3.81020787 5.08243908 9.38448971]

...

[ 0.20355536 -0.24135565 2.17378505 5.44757671 9.50053706]

[ 0.20355536 -0.37830076 2.90179999 6.50300091 9.49852951]

[ 0.20337534 -0.52307159 4.11483084 4.68294379 9.84908592]]

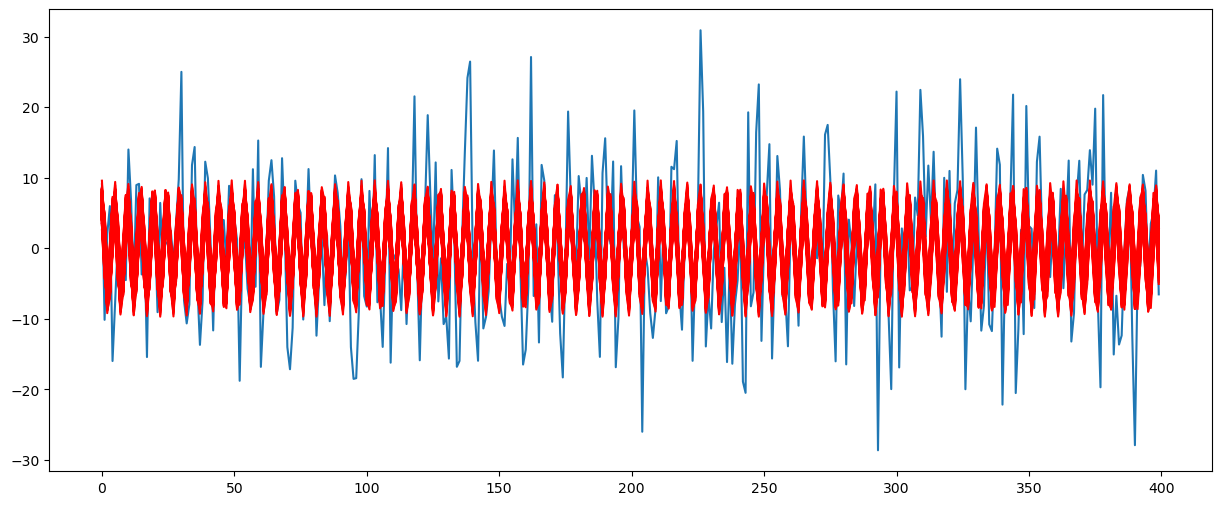

Let us plot the posterior functions along with the original data to visualize uncertainty.

x = np.arange(1, n + 1)

plt.figure(figsize = (15, 6))

plt.plot(y)

for i in range(N):

f = fpostsamples[i]

b0 = post_samples[i, 1]

b1 = post_samples[i, 2]

b2 = post_samples[i, 3]

ftdval = b0 + b1 * np.cos(2 * np.pi * f * x) + b2 * np.sin(2 * np.pi * f * x)

plt.plot(ftdval, color = 'red')

A simple summary of the posterior samples can be obtained as follows.

pd.DataFrame(post_samples).describe()

# note that the true value of f is 0.2035, b0 is 0, b1 is 3, b2 is 5 and sigma is 10Change-point Model¶

The same method can be used for inference in other nonlinear models. For example, consider the following model:

This is known as a change-point model. The parameter is called the change-point. is the indicator function which takes the value 1 if and 0 otherwise. The function equals for times and equals for times . Therefore this model states that the level of the time series equals until a time at which point it switches to . The value of is therefore called the changepoint. From the given data , we need to infer the parameter as well as . The unknown parameter makes it a nonlinear model. If were known, this will become a linear regression model with -matrix given by

Inference for the parameter proceeds just like before. We first compute RSS():

and then minimize over to obtain the MLE of . After finding , we can find the MLEs of the other parameters as in linear regression with known .

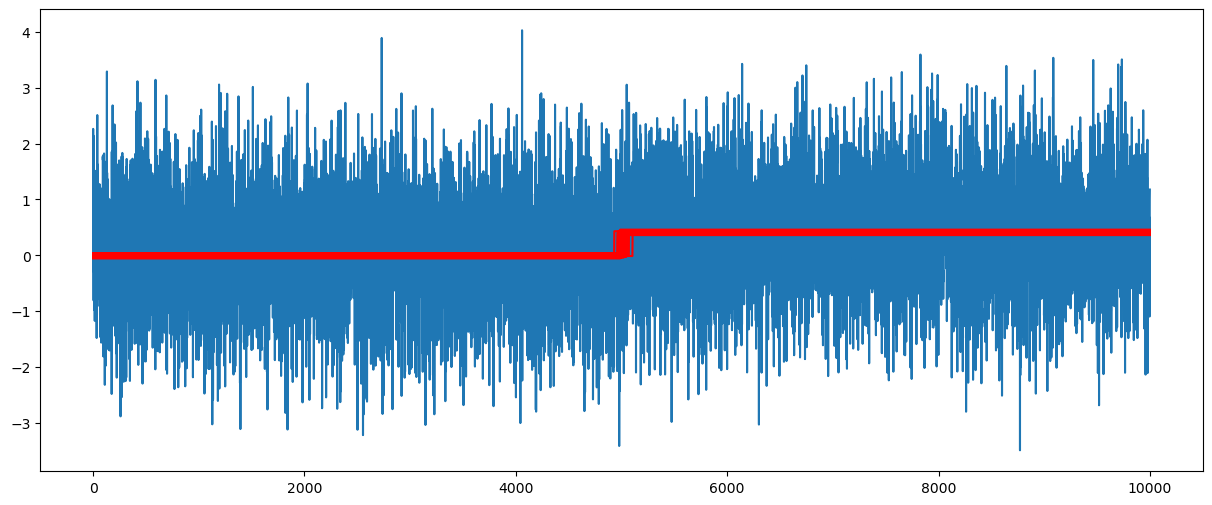

# Here is a simulated dataset having a change point:

n = 10000

mu1 = 0

mu2 = 0.4

dt = np.concatenate([np.repeat(mu1, n / 2), np.repeat(mu2, n / 2)])

sig = 1

errorsamples = rng.normal(loc = 0, scale = sig, size = n)

y = dt + errorsamplesplt.figure(figsize = (15, 6))

plt.plot(y)

plt.show()

def rss(c):

x = np.arange(1, n + 1)

xc = (x > c).astype(float)

X = np.column_stack([np.ones(n), xc])

md = sm.OLS(y, X).fit()

rss = np.sum(md.resid ** 2)

return rssallcvals = np.arange(5, n - 4)

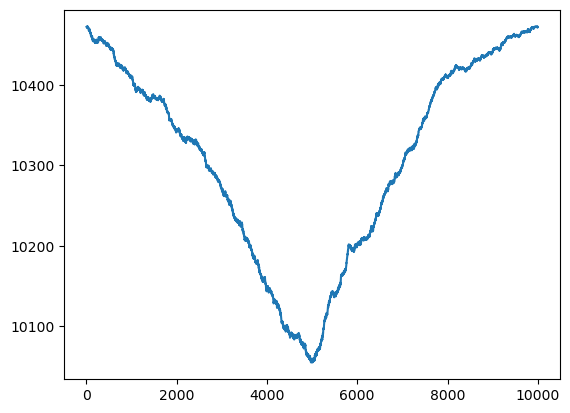

# we are ignoring a few points at the beginning and at the end

rssvals = np.array([rss(c) for c in allcvals])plt.plot(allcvals, rssvals)

plt.show()

c_hat = allcvals[np.argmin(rssvals)]

print(c_hat)4981

# Estimates of other parameters:

x = np.arange(1, n + 1)

xc = (x > c_hat).astype(float)

X = np.column_stack([np.ones(n), xc])

md = sm.OLS(y, X).fit()

print(md.params)

# this gives estimates of beta_0, beta_1 (compare them to the true values which generated the data)

b0_est = md.params[0]

b1_est = md.params[1]

b0_true = mu1

b1_true = mu2 - mu1

print(np.column_stack((np.array([b0_est, b1_est]), np.array([b0_true, b1_true]))))

rss_chat = np.sum(md.resid ** 2)

sigma_mle = np.sqrt(rss_chat / n)

sigma_unbiased = np.sqrt((rss_chat)/(n - 2))

print(np.array([sigma_mle, sigma_unbiased, sig]))

#sig is the true value of sigma which generated the data[0.01969268 0.40873694]

[[0.01969268 0. ]

[0.40873694 0.4 ]]

[1.00274789 1.00284818 1. ]

Bayesian uncertainty quantification also works exactly as before. The Bayesian posterior for is:

where and denotes the determinant of .

As before, it is better to compute the logarithm of the posterior (as opposed to the posterior directly) because of numerical issues.

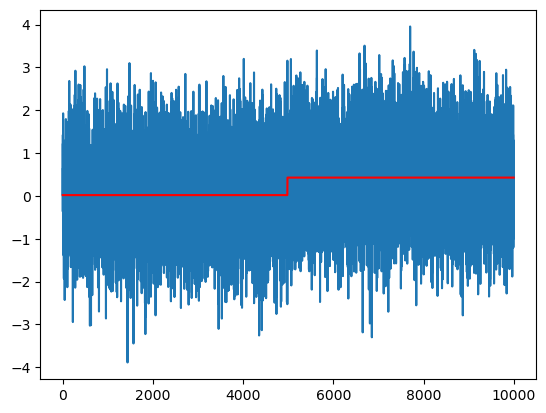

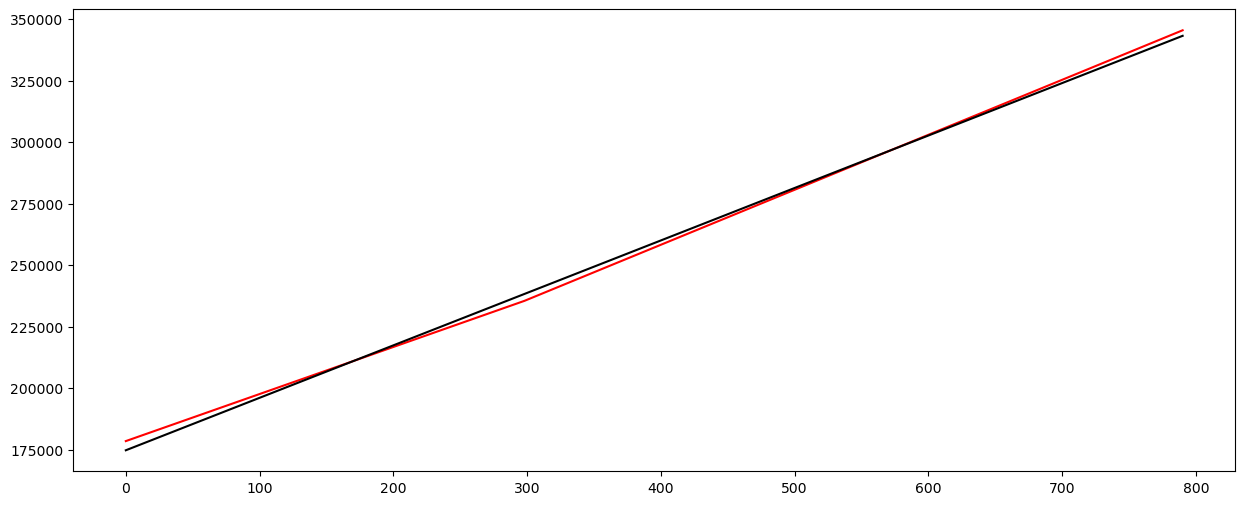

# Plot the fitted values:

plt.plot(y)

plt.plot(md.fittedvalues, color = 'red')

def logpost(c):

x = np.arange(1, n+1)

xc = (x > c).astype(float)

X = np.column_stack([np.ones(n), xc])

p = X.shape[1]

md = sm.OLS(y, X).fit()

rss = np.sum(md.resid ** 2)

sgn, log_det = np.linalg.slogdet(np.dot(X.T, X))

# sgn gives the sign of the determinant (in our case, this should 1)

# log_det gives the logarithm of the absolute value of the determinant

logval = ((p - n) / 2) * np.log(rss) - 0.5 * log_det

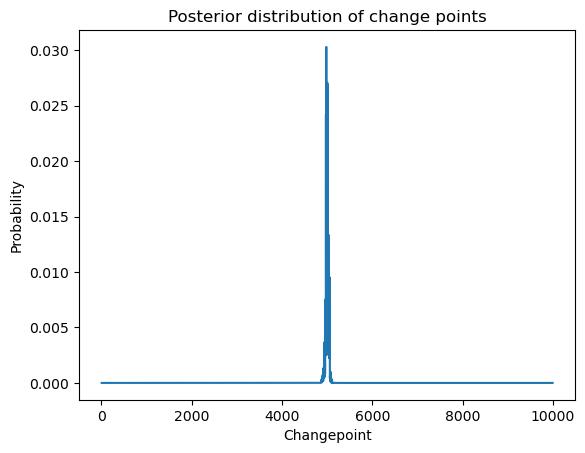

return logvalallcvals = np.arange(5, n - 4)

logpostvals = np.array([logpost(c) for c in allcvals])

plt.plot(allcvals, logpostvals)

plt.xlabel('Changepoint')

plt.ylabel('Value')

plt.title('Logarithm of (unnormalized) posterior density')

plt.show()

# this plot looks similar to the RSS plot

Let us exponentiate the log-posterior values to get the posterior.

postvals_unnormalized = np.exp(logpostvals - np.max(logpostvals))

postvals = postvals_unnormalized / (np.sum(postvals_unnormalized))

plt.plot(allcvals, postvals)

plt.xlabel('Changepoint')

plt.ylabel('Probability')

plt.title('Posterior distribution of change points')

plt.show()

We draw posterior samples exactly as before.

N = 1000

cpostsamples = rng.choice(allcvals, N, replace = True, p = postvals)

post_samples = np.zeros(shape = (N, 4))

post_samples[:, 0] = cpostsamples

for i in range(N):

c = cpostsamples[i]

x = np.arange(1, n + 1)

xc = (x > c).astype(float)

X = np.column_stack([np.ones(n), xc])

p = X.shape[1]

md_c = sm.OLS(y, X).fit()

chirv = rng.chisquare(df = n - p)

sig_sample = np.sqrt(np.sum(md_c.resid ** 2) / chirv) # posterior sample from sigma

post_samples[i, (p + 1)] = sig_sample

covmat = (sig_sample ** 2) * np.linalg.inv(np.dot(X.T, X))

beta_sample = rng.multivariate_normal(mean = md_c.params, cov = covmat, size = 1)

post_samples[i, 1:(p + 1)] = beta_sample

print(post_samples)[[5.01400000e+03 1.61186008e-02 4.15836357e-01 9.94694854e-01]

[5.07500000e+03 2.21172885e-02 4.02259427e-01 9.96874208e-01]

[4.93100000e+03 6.69816740e-03 4.29948362e-01 9.91129525e-01]

...

[5.03000000e+03 1.52941625e-02 4.27556266e-01 1.00020949e+00]

[5.01400000e+03 2.52471690e-02 3.94416060e-01 9.92312337e-01]

[4.99600000e+03 1.99135692e-02 3.90096091e-01 1.00377472e+00]]

These posterior samples can be used to visualize the uncertainty in the fitted functions.

x = np.arange(1, n + 1)

plt.figure(figsize = (15, 6))

plt.plot(y)

for i in range(N):

c = cpostsamples[i]

b0 = post_samples[i, 1]

b1 = post_samples[i, 2]

ftdval = b0 + b1 * (x > c).astype(float)

plt.plot(ftdval, color = 'red')

# Summary of the posterior samples:

pd.DataFrame(post_samples).describe()Change of Slope (or Broken Stick Regression)¶

Now consider the model:

Here denotes the positive part function (also known as the ReLU function). This model has parameters and (as well as σ). This is a nonlinear regression model because of the presence of the parameter . If a known value is plugged in for , we would revert to a linear regression model.

This model states that, until the time point , the slope parameter equals . After , the slope parameter becomes .

Let us fit this model to the US population dataset that we previously used in the class.

uspop = pd.read_csv("POPTHM-Jan2025FRED.csv")

y = uspop['POPTHM']

n = len(y)

plt.plot(y)

plt.show()

For obtaining the MLEs, we proceed exactly as before. The RSS is now given by:

def rss(c):

x = np.arange(1, n + 1)

xc = ((x > c).astype(float)) * (x - c)

X = np.column_stack([np.ones(n), x, xc])

md = sm.OLS(y, X).fit()

rss = np.sum(md.resid ** 2)

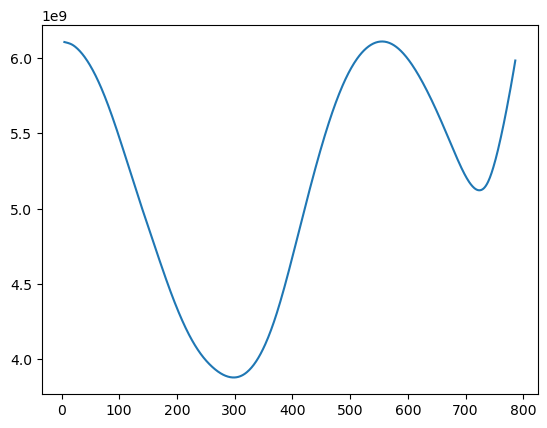

return rssallcvals = np.arange(5, n - 4) # we are ignoring a few points at the beginning and at the end

rssvals = np.array([rss(c) for c in allcvals])

plt.plot(allcvals, rssvals)

plt.show()

c_hat = allcvals[np.argmin(rssvals)]

print(c_hat)298

# Estimates of other parameters:

x = np.arange(1, n + 1)

c = c_hat

xc = ((x > c).astype(float)) * (x - c)

X = np.column_stack([np.ones(n), x, xc])

md = sm.OLS(y, X).fit()

print(md.params)

# this gives estimates of beta_0, beta_1, beta_2 (this is a real dataset so there are no true parameters)

rss_chat = np.sum(md.resid ** 2)

sigma_mle = np.sqrt(rss_chat / n)

sigma_unbiased = np.sqrt((rss_chat) / (n - 3))

print(np.array([sigma_mle, sigma_unbiased]))

# sig is the true value of sigma which generated the dataconst 178331.892380

x1 191.173841

x2 32.346330

dtype: float64

[2214.41969096 2218.63095249]

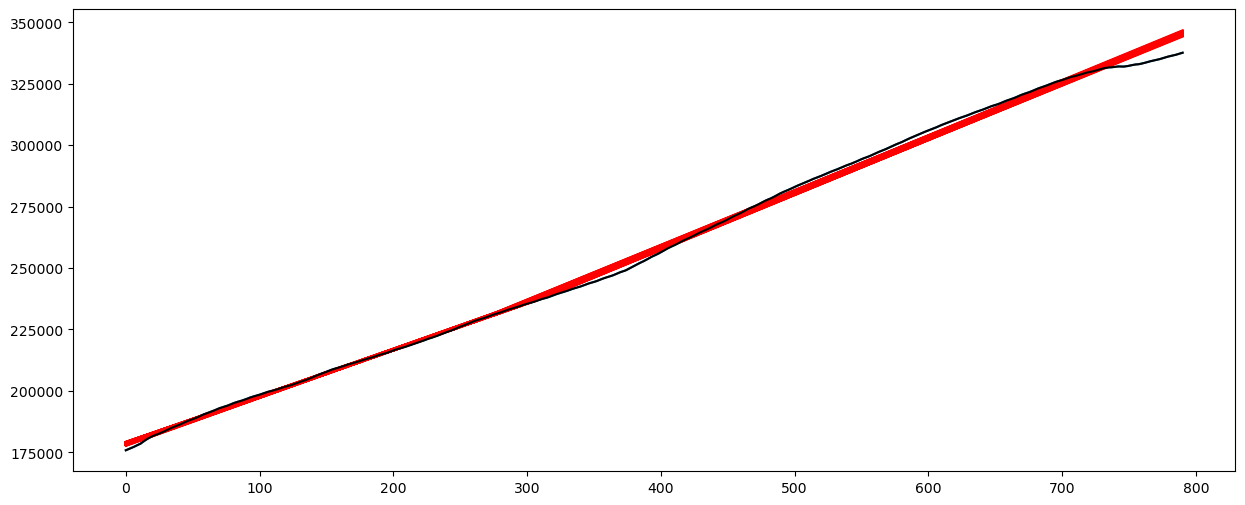

# Plot fitted values

X_linmod = np.column_stack([np.ones(n), x])

linmod = sm.OLS(y, X_linmod).fit()

plt.figure(figsize = (15, 6))

# Plot this broken regression fitted values along with linear model fitted values

plt.plot(y, color = 'None')

plt.plot(md.fittedvalues, color = 'red')

plt.plot(linmod.fittedvalues, color = 'black')

plt.show()

# Bayesian log posterior

def logpost(c):

x = np.arange(1, n + 1)

xc = ((x > c).astype(float)) * (x - c)

X = np.column_stack([np.ones(n), x, xc])

p = X.shape[1]

md = sm.OLS(y, X).fit()

rss = np.sum(md.resid ** 2)

sgn, log_det = np.linalg.slogdet(np.dot(X.T, X)) #sgn gives the sign of the determinant (in our case, this should 1)

# log_det gives the logarithm of the absolute value of the determinant

logval = ((p - n) / 2) * np.log(rss) - 0.5 * log_det

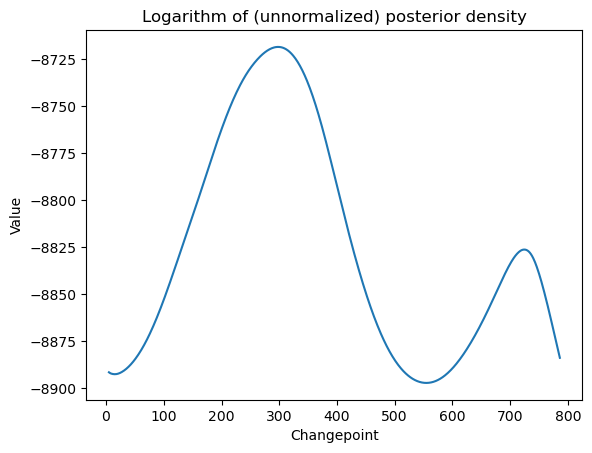

return logvalallcvals = np.arange(5, n - 4)

logpostvals = np.array([logpost(c) for c in allcvals])

plt.plot(allcvals, logpostvals)

plt.xlabel('Changepoint')

plt.ylabel('Value')

plt.title('Logarithm of (unnormalized) posterior density')

plt.show()

# this plot looks similar to the RSS plot

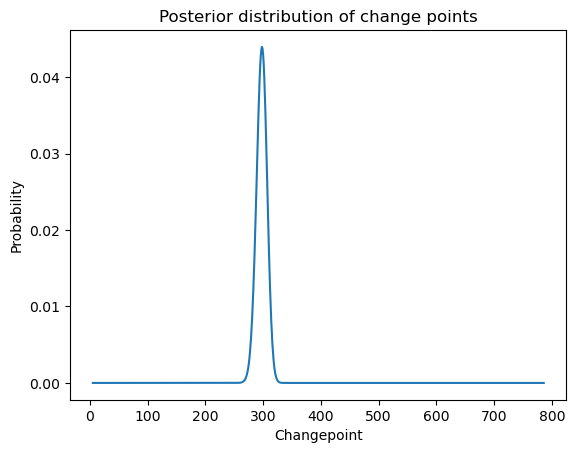

postvals_unnormalized = np.exp(logpostvals - np.max(logpostvals))

postvals = postvals_unnormalized / (np.sum(postvals_unnormalized))

plt.plot(allcvals, postvals)

plt.xlabel('Changepoint')

plt.ylabel('Probability')

plt.title('Posterior distribution of change points')

plt.show()

# Drawing posterior samples:

N = 2000

cpostsamples = rng.choice(allcvals, N, replace = True, p = postvals)

post_samples = np.zeros(shape = (N, 5))

post_samples[:, 0] = cpostsamples

for i in range(N):

f = cpostsamples[i]

x = np.arange(1, n + 1)

xc = ((x > c).astype(float)) * (x - c)

X = np.column_stack([np.ones(n), x, xc])

p = X.shape[1]

md_c = sm.OLS(y, X).fit()

chirv = rng.chisquare(df = n - p)

sig_sample = np.sqrt(np.sum(md_c.resid ** 2) / chirv) # posterior sample from sigma

post_samples[i, (p + 1)] = sig_sample

covmat = (sig_sample ** 2) * np.linalg.inv(np.dot(X.T, X))

beta_sample = rng.multivariate_normal(mean = md_c.params, cov = covmat, size = 1)

post_samples[i, 1:(p + 1)] = beta_sample

print(post_samples)[[3.02000000e+02 1.78679868e+05 1.89836033e+02 3.42169717e+01

2.22133894e+03]

[2.85000000e+02 1.78325271e+05 1.91295418e+02 3.24809108e+01

2.25595672e+03]

[2.83000000e+02 1.78395919e+05 1.90801617e+02 3.30139821e+01

2.25832125e+03]

...

[2.90000000e+02 1.78563273e+05 1.90179251e+02 3.33837727e+01

2.15566567e+03]

[2.90000000e+02 1.78292844e+05 1.91629020e+02 3.21929322e+01

2.29143430e+03]

[3.04000000e+02 1.78581825e+05 1.90525611e+02 3.27431913e+01

2.21071767e+03]]

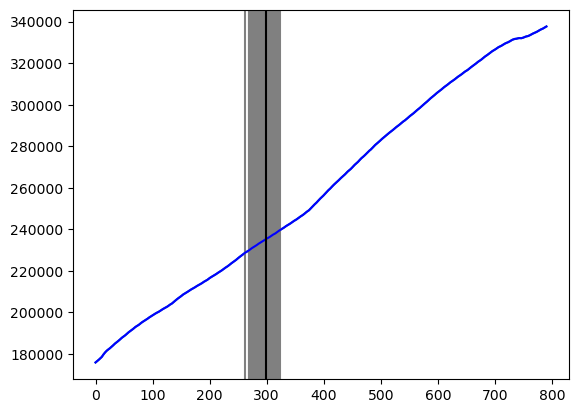

# Let us plot the posterior samples for c on the original plot:

plt.plot(y)

for i in range(N):

plt.axvline(x = cpostsamples[i], color = 'gray')

plt.plot(y, color = 'blue')

plt.axvline(x = c_hat, color = 'black')

plt.show()

# Plot the fitted values for the different posterior draws:

x = np.arange(1, n + 1)

plt.figure(figsize = (15, 6))

plt.plot(y)

for i in range(N):

c = cpostsamples[i]

b0 = post_samples[i, 1]

b1 = post_samples[i, 2]

b2 = post_samples[i, 3]

ftdval = b0 + b1 * x + b2 * ((x > c).astype(float)) * (x - c)

plt.plot(ftdval, color = 'red')

plt.plot(y, color = 'black')

plt.show()

#Summary of the posterior samples:

pd.DataFrame(post_samples).describe()