import numpy as np

import statsmodels.api as sm

import pandas as pd

import matplotlib.pyplot as plt

from statsmodels.graphics.tsaplots import plot_acf, plot_pacf

from statsmodels.tsa.ar_model import AutoReg

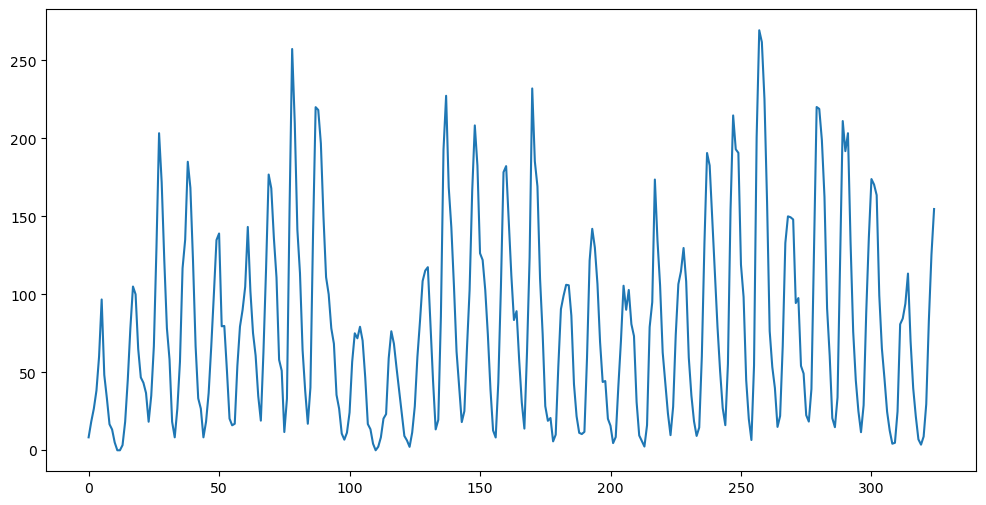

from statsmodels.tsa.stattools import pacfLet us illustrate the concepts of ACF and PACF through the sunspots dataset.

sunspots = pd.read_csv('SN_y_tot_V2.0.csv', header=None, sep=';')

print(sunspots.head())

y = sunspots.iloc[:, 1].values

n = len(y)

plt.figure(figsize = (12, 6))

plt.plot(y)

plt.show() 0 1 2 3 4

0 1700.5 8.3 -1.0 -1 1

1 1701.5 18.3 -1.0 -1 1

2 1702.5 26.7 -1.0 -1 1

3 1703.5 38.3 -1.0 -1 1

4 1704.5 60.0 -1.0 -1 1

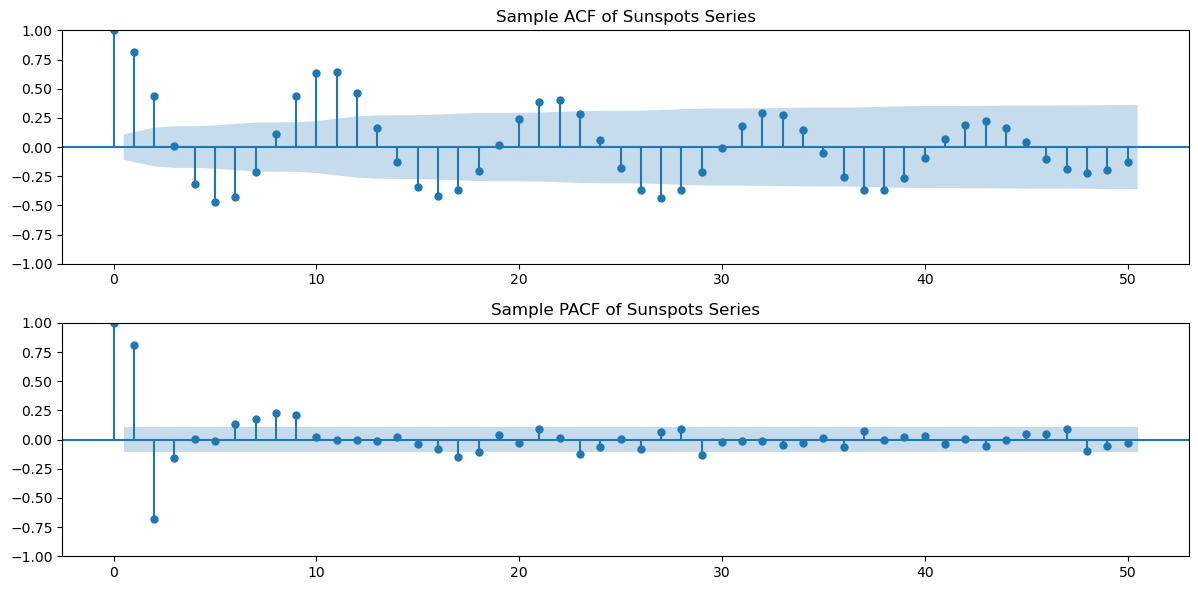

h_max = 50

fig, axes = plt.subplots(nrows = 2, ncols = 1, figsize = (12, 6))

plot_acf(y, lags = h_max, ax = axes[0])

axes[0].set_title("Sample ACF of Sunspots Series")

plot_pacf(y, lags = h_max, ax = axes[1])

axes[1].set_title("Sample PACF of Sunspots Series")

plt.tight_layout()

plt.show()

The sample ACF has an oscillatory pattern, and the sample autocorrelations only become negligible after lag 12 (even though sample autocorrelations at lags 15, 16, 17 also seem nontrivial). This suggests that MA models may not be appropriate for this dataset. The partial PACF clearly has two big spikes at lags 1 and 2, and then mostly small. This suggests that an AR(2) model might be appropriate. One can also consider the sample PACF at lags 7, 8, 9 to be nonnegligible. In this case, we can try to fit AR(9) to the data.

Calculation of PACF¶

Let us see how the PACF is calculated. The definition of PACF() is the estimate of when the AR() model is fit to the data. Let us check if this is indeed the case.

def sample_pacf(y, p_max):

pautocorr = []

for p in range(1, p_max + 1):

armd = AutoReg(y, lags = p).fit() # fitting the AR(p) model

phi_p = armd.params[-1] # taking the last estimated coefficient

pautocorr.append(phi_p)

return pautocorrLet us now compare these values with the values given by an inbuilt function for pacf.

p_max = 50

pacf_vals = sample_pacf(y, p_max)

pacf_vals_sm = pacf(y, nlags=p_max, method='ols') # these pacf values start with the value 1 at lag 0.

print(np.column_stack([pacf_vals, pacf_vals_sm[1:]])) [[ 0.81814243 0.81814243]

[-0.69646032 -0.69646032]

[-0.14551566 -0.14551566]

[ 0.01078091 0.01078091]

[-0.00988486 -0.00988486]

[ 0.13721057 0.13721057]

[ 0.20129653 0.20129653]

[ 0.22159369 0.22159369]

[ 0.21768779 0.21768779]

[ 0.01979271 0.01979271]

[ 0.01220908 0.01220908]

[-0.01159196 -0.01159196]

[ 0.00638536 0.00638536]

[ 0.04363913 0.04363913]

[-0.05535382 -0.05535382]

[-0.07389671 -0.07389671]

[-0.16269894 -0.16269894]

[-0.12338723 -0.12338723]

[ 0.05099077 0.05099077]

[-0.02507587 -0.02507587]

[ 0.09908343 0.09908343]

[ 0.01560164 0.01560164]

[-0.12666585 -0.12666585]

[-0.07148407 -0.07148407]

[ 0.00513059 0.00513059]

[-0.11203047 -0.11203047]

[ 0.05033772 0.05033772]

[ 0.07062661 0.07062661]

[-0.13345508 -0.13345508]

[-0.0234795 -0.0234795 ]

[-0.00607123 -0.00607123]

[-0.01538124 -0.01538124]

[-0.02963781 -0.02963781]

[-0.00909349 -0.00909349]

[-0.01330015 -0.01330015]

[-0.05143092 -0.05143092]

[ 0.06104478 0.06104478]

[-0.00216343 -0.00216343]

[ 0.02005769 0.02005769]

[ 0.04261196 0.04261196]

[-0.02111046 -0.02111046]

[-0.00650194 -0.00650194]

[-0.03813196 -0.03813196]

[-0.00691118 -0.00691118]

[ 0.05843472 0.05843472]

[ 0.04757612 0.04757612]

[ 0.09585197 0.09585197]

[-0.12695263 -0.12695263]

[-0.02920994 -0.02920994]

[-0.03182224 -0.03182224]]

See that the two sets of sample pacf values coincide exactly.

Regression and Partial Correlation¶

As already mentioned, the sample pacf at lag is defined as the estimate of when the AR() model is fit to the data. From this definition, it makes sense that these values can be used for AR model selection. However why does this definition have the name “partial correlation”. In statistics, the partial correlation between two variables given a bunch of other variables is defined as the correlation between the residuals and . Below we calculate the partial correlation in terms of residuals, and then see how it is related to our definition of the sample pacf.

Let us first fix a value of and then compute the sample PACF at lag directly (without using inbuilt library functions).

p = 9 # fix a value of p

yreg = y[p: ] # these are the response values in the autoregression

Xmat = np.ones((n - p, 1)) # this will be the design matrix (X) in the autoregression

for j in range(1, p + 1):

col = y[p - j:n - j].reshape(-1, 1)

Xmat = np.column_stack([Xmat, col])

armod = sm.OLS(yreg, Xmat).fit()

print(armod.params)

pacf_lag_p = armod.params[-1]

print(pacf_lag_p)[ 1.27820089e+01 1.17199169e+00 -4.20714239e-01 -1.35002174e-01

1.01279088e-01 -6.66442555e-02 1.83774039e-03 1.51264798e-02

-4.29674964e-02 2.17687794e-01]

0.2176877941518983

Here the response variable yreg denotes . The first column of Xmat is the column of ones, the second column is , third column is and so on with the last column being .

print(np.column_stack([yreg, Xmat]))

print(y)[[ 13.3 1. 16.7 ... 26.7 18.3 8.3]

[ 5. 1. 13.3 ... 38.3 26.7 18.3]

[ 0. 1. 5. ... 60. 38.3 26.7]

...

[ 83.2 1. 29.6 ... 69.8 113.3 94. ]

[125.5 1. 83.2 ... 39.8 69.8 113.3]

[154.7 1. 125.5 ... 21.7 39.8 69.8]]

[ 8.3 18.3 26.7 38.3 60. 96.7 48.3 33.3 16.7 13.3 5. 0.

0. 3.3 18.3 45. 78.3 105. 100. 65. 46.7 43.3 36.7 18.3

35. 66.7 130. 203.3 171.7 121.7 78.3 58.3 18.3 8.3 26.7 56.7

116.7 135. 185. 168.3 121.7 66.7 33.3 26.7 8.3 18.3 36.7 66.7

100. 134.8 139. 79.5 79.7 51.2 20.3 16. 17. 54. 79.3 90.

104.8 143.2 102. 75.2 60.7 34.8 19. 63. 116.3 176.8 168. 136.

110.8 58. 51. 11.7 33. 154.2 257.3 209.8 141.3 113.5 64.2 38.

17. 40.2 138.2 220. 218.2 196.8 149.8 111. 100. 78.2 68.3 35.5

26.7 10.7 6.8 11.3 24.2 56.7 75. 71.8 79.2 70.3 46.8 16.8

13.5 4.2 0. 2.3 8.3 20.3 23.2 59. 76.3 68.3 52.9 38.5

24.2 9.2 6.3 2.2 11.4 28.2 59.9 83. 108.5 115.2 117.4 80.8

44.3 13.4 19.5 85.8 192.7 227.3 168.7 143. 105.5 63.3 40.3 18.1

25.1 65.8 102.7 166.3 208.3 182.5 126.3 122. 102.7 74.1 39. 12.7

8.2 43.4 104.4 178.3 182.2 146.6 112.1 83.5 89.2 57.8 30.7 13.9

62.8 123.6 232. 185.3 169.2 110.1 74.5 28.3 18.9 20.7 5.7 10.

53.7 90.5 99. 106.1 105.8 86.3 42.4 21.8 11.2 10.4 11.8 59.5

121.7 142. 130. 106.6 69.4 43.8 44.4 20.2 15.7 4.6 8.5 40.8

70.1 105.5 90.1 102.8 80.9 73.2 30.9 9.5 6. 2.4 16.1 79.

95. 173.6 134.6 105.7 62.7 43.5 23.7 9.7 27.9 74. 106.5 114.7

129.7 108.2 59.4 35.1 18.6 9.2 14.6 60.2 132.8 190.6 182.6 148.

113. 79.2 50.8 27.1 16.1 55.3 154.3 214.7 193. 190.7 118.9 98.3

45. 20.1 6.6 54.2 200.7 269.3 261.7 225.1 159. 76.4 53.4 39.9

15. 22. 66.8 132.9 150. 149.4 148. 94.4 97.6 54.1 49.2 22.5

18.4 39.3 131. 220.1 218.9 198.9 162.4 91. 60.5 20.6 14.8 33.9

123. 211.1 191.8 203.3 133. 76.1 44.9 25.1 11.6 28.9 88.3 136.3

173.9 170.4 163.6 99.3 65.3 45.8 24.7 12.6 4.2 4.8 24.9 80.8

84.5 94. 113.3 69.8 39.8 21.7 7. 3.6 8.8 29.6 83.2 125.5

154.7]

Now let us calculate the same sample PACF value using partial correlation. Specifically, we will calculate the partial correlation between the variables and given all the intervening variables . For this, we first need to calculate the residual of given as well as the residual of given .

The residual of given is calculated as follows.

armod1 = sm.OLS(yreg, Xmat[:, :-1]).fit()

res_y = armod1.residThe residual of given is calculated as follows.

armod2 = sm.OLS(Xmat[:, -1], Xmat[:, :-1]).fit()

res_x = armod2.residThe sample correlation between these two residuals is the sample PACF at lag :

pcorr = np.corrcoef(res_y, res_x)[0, 1]

print(pcorr, pacf_lag_p)0.21950061866415008 0.2176877941518983

Note that this is basically the same as the Sample PACF at lag . The minor discrepancy occurs because the two residuals do not have exactly the same variance. More precisely, the regression coefficient in a multiple linear regression of on a set of covariates satisfies the following formula:

var_res_y = np.var(res_y)

var_res_x = np.var(res_x)

print(var_res_y, var_res_x)570.4359419392475 579.9762661182705

These residual variances are similar but not exactly the same. This explains why the correlation between the residuals does not exactly equal the fitted regression coefficient . But if we multiply the partial correlation by the ratio of the standard deviations, we recover the sample pacf at lag p (i.e., ) exactly.

reg_coeff = pcorr * np.sqrt(var_res_y/var_res_x)

print(reg_coeff, pacf_lag_p)0.2176877941518987 0.2176877941518983

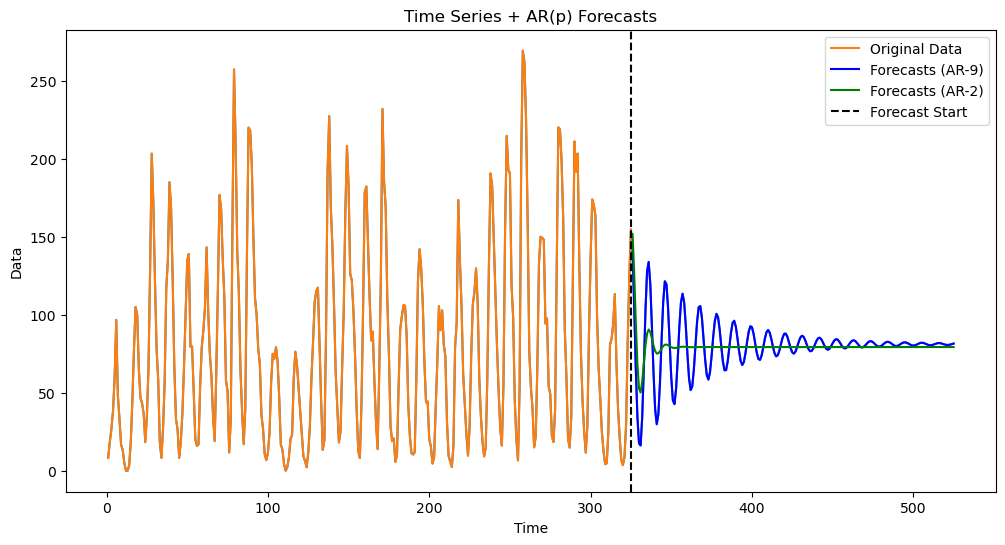

Fitting AR(9) and AR(2) to the Sunspots Data¶

Based on the PACF plot, the two models which make sense for the sunspots data are AR(2) and AR(9). Let us fit both of these models to the data.

armd_2 = AutoReg(y, lags=2).fit()

armd_9 = AutoReg(y, lags=9).fit() k = 200

fcast_9 = armd_9.get_prediction(start=n, end=n+k-1)

fcast_mean_9 = fcast_9.predicted_mean

fcast_2 = armd_2.get_prediction(start=n, end=n+k-1)

fcast_mean_2 = fcast_2.predicted_meanyhat_9 = np.concatenate([y, fcast_mean_9])

yhat_2 = np.concatenate([y, fcast_mean_2])

plt.figure(figsize=(12, 6))

time_all = np.arange(1, n + k + 1)

plt.plot(time_all, yhat_9, color='C0')

plt.plot(range(1, n + 1), y, label='Original Data', color='C1')

plt.plot(range(n + 1, n + k + 1), fcast_mean_9, label='Forecasts (AR-9)', color='blue')

plt.plot(range(n + 1, n + k + 1), fcast_mean_2, label='Forecasts (AR-2)', color='green')

plt.axvline(x=n, color='black', linestyle='--', label='Forecast Start')

plt.xlabel('Time')

plt.ylabel('Data')

plt.title('Time Series + AR(p) Forecasts')

plt.legend()

plt.show()

Visually the AR(9) predictions appear better at least for the initial few predictions.

AR models and Stationarity¶

For an AR(p) model , its characteristic polynomial is given by . This is a polynomial of degree so it has roots . Some of the roots may be complex (even though are all real). If the modulus of is strictly larger than 1 for every , then the corresponding AR(p) model is called causal and stationary.

Let us check stationary of the fitted AR(2) and AR(9) models that we just fitted to the sunspots dataset.

print(armd_2.summary()) AutoReg Model Results

==============================================================================

Dep. Variable: y No. Observations: 325

Model: AutoReg(2) Log Likelihood -1505.524

Method: Conditional MLE S.D. of innovations 25.588

Date: Thu, 10 Apr 2025 AIC 3019.048

Time: 15:55:25 BIC 3034.159

Sample: 2 HQIC 3025.080

325

==============================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------

const 24.4561 2.372 10.308 0.000 19.806 29.106

y.L1 1.3880 0.040 34.685 0.000 1.310 1.466

y.L2 -0.6965 0.040 -17.423 0.000 -0.775 -0.618

Roots

=============================================================================

Real Imaginary Modulus Frequency

-----------------------------------------------------------------------------

AR.1 0.9965 -0.6655j 1.1983 -0.0937

AR.2 0.9965 +0.6655j 1.1983 0.0937

-----------------------------------------------------------------------------

The roots and their modulus are actually given as part of the summary output. We can also compute them as follows.

# characteristic polynomial

coeffs = [(-1) * armd_2.params[2], (-1) * armd_2.params[1], 1] # these are the coefficients of the characteristic polynomial

roots = np.roots(coeffs) # these are the roots of the characteristic polynomial

print(coeffs)

print(roots)

magnitudes = np.abs(roots)

print(magnitudes)[np.float64(0.6964603222695152), np.float64(-1.3880327164912336), 1]

[0.99649088+0.66546067j 0.99649088-0.66546067j]

[1.19826206 1.19826206]

As the magnitude of both roots is strictly larger than 1, this is a stationary AR(2) model (it is also causal).

Now let us check whether the fitted AR(9) model is causal and stationary.

print(armd_9.summary()) AutoReg Model Results

==============================================================================

Dep. Variable: y No. Observations: 325

Model: AutoReg(9) Log Likelihood -1443.314

Method: Conditional MLE S.D. of innovations 23.301

Date: Thu, 10 Apr 2025 AIC 2908.628

Time: 15:55:25 BIC 2949.941

Sample: 9 HQIC 2925.132

325

==============================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------

const 12.7820 4.002 3.194 0.001 4.938 20.626

y.L1 1.1720 0.055 21.338 0.000 1.064 1.280

y.L2 -0.4207 0.086 -4.905 0.000 -0.589 -0.253

y.L3 -0.1350 0.089 -1.525 0.127 -0.309 0.039

y.L4 0.1013 0.088 1.149 0.251 -0.072 0.274

y.L5 -0.0666 0.088 -0.757 0.449 -0.239 0.106

y.L6 0.0018 0.088 0.021 0.983 -0.171 0.174

y.L7 0.0151 0.088 0.173 0.863 -0.157 0.187

y.L8 -0.0430 0.085 -0.508 0.612 -0.209 0.123

y.L9 0.2177 0.054 3.999 0.000 0.111 0.324

Roots

=============================================================================

Real Imaginary Modulus Frequency

-----------------------------------------------------------------------------

AR.1 1.0701 -0.0000j 1.0701 -0.0000

AR.2 0.8499 -0.5728j 1.0249 -0.0944

AR.3 0.8499 +0.5728j 1.0249 0.0944

AR.4 0.4186 -1.0985j 1.1756 -0.1921

AR.5 0.4186 +1.0985j 1.1756 0.1921

AR.6 -0.4824 -1.2224j 1.3142 -0.3098

AR.7 -0.4824 +1.2224j 1.3142 0.3098

AR.8 -1.2225 -0.4668j 1.3086 -0.4419

AR.9 -1.2225 +0.4668j 1.3086 0.4419

-----------------------------------------------------------------------------

All roots have magnitudes strictly larger than 1 so this is also a causal and stationary model. These roots and their magnitudes can also be computed as follows.

# Extract coefficients for the characteristic polynomial

coeffs = [(-1) * armd_9.params[i] for i in range(9, 0, -1)] # Reverse order: lags 9→1

coeffs.append(1)

roots = np.roots(coeffs)

magnitudes = np.abs(roots)

print(magnitudes)[1.30855577 1.30855577 1.31416814 1.31416814 1.17555576 1.17555576

1.07010924 1.02490237 1.02490237]

These magnitudes coincide with the values in the table although the order in which they are listed in the table may be different (there is no default ordering for displaying the roots).